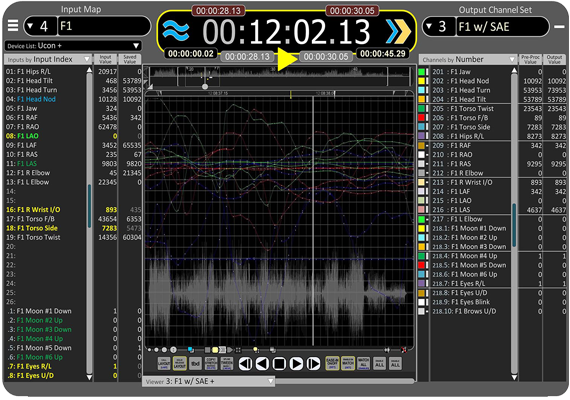

When I left WDI in 2002, the first thing I did was build a tactile unit of my own (with the help of a talented embedded systems engineer). I wanted to experiment with applying the methods I'd come to appreciate in animatronic editing to CG character animation. One criterion I considered important was being able to pose a whole character without a lot of UI interaction. Film rigs might have hundreds of controls, but game rigs usually have to be more efficient, so I settled on 64 analog inputs.

Implementing a system was problematic, though. Maya wasn't yet ready to support real-time editing. I'd been using MotionBuilder since the early FilmBox versions, so I focused on taking advantage of its real-time recording and playback core. What I found was that the way MB exposed those features (designed to support mocap) imposed constraints that made an efficient workflow impossible. Doing character animation with this type of system requires solid control over which controls are selected, plus an efficient way to apply a sampled recording to the primary animation layer. After several rounds of plug-in development, I simply couldn't streamline the workflow enough to make it worth choosing over hand-keying.

To make tangible progress, I needed a solid content-creation platform to work with. That took a decade longer than seemed necessary, but CG content-creation software development is naturally driven by the large-market pipelines, which remain narrowly focused on the hand-key process. Only recently has the primary content-creation tool reached the point where it can really support directly capturing and manipulating real-time input. Autodesk's strategy to retire MB is, IMO, an indication that they now view Maya as a viable real-time platform. Finally!

The console pictured here looks like it was built in a garage because, well, it really was. I started with an old audio mixing board, put a wooden frame around it to get the rake I wanted without having to machine parts, and personally soldered hundreds of points to wire it up. By some miracle, it worked 100% the first time I turned it on! It was intended as a learning tool, so I wasn't too concerned about aesthetics. It's a dinosaur, but it still works 20 years later.